Understanding Accuracy, Precision, Recall, Specificity, and F1 Score in Model Evaluation

Table of Contents

- Introduction to Model Evaluation Metrics

- Confusion Matrix: The Foundation

- Accuracy: The Starting Point

- Precision: Measuring Exactness

- Recall (Sensitivity): Measuring Completeness

- Specificity: The Underappreciated Metric

- F1 Score: Balancing Precision and Recall

- Choosing the Right Metric for Your Model

- Leveraging Scikit-Learn for Metric Calculation

- Conclusion

Introduction to Model Evaluation Metrics

When developing classification models, it’s crucial to assess how well your model performs beyond just overall accuracy. Different metrics provide insights into various aspects of your model’s performance, helping you make informed decisions based on the specific needs of your application.

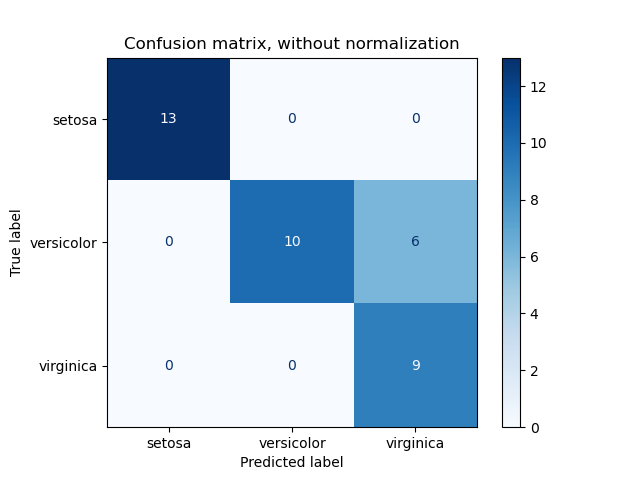

Confusion Matrix: The Foundation

A confusion matrix is a table that summarizes the performance of a classification model by comparing the actual target values with those predicted by the model. For binary classification, it is a 2×2 matrix containing:

- True Positives (TP): Positive instances that the model correctly predicted as positive.

- False Positives (FP): Negative instances that the model incorrectly predicted as positive.

- True Negatives (TN): Negative instances that the model correctly predicted as negative.

- False Negatives (FN): Positive instances that the model incorrectly predicted as negative.

Understanding these components is essential as they form the basis for calculating various performance metrics.
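As a minimal sketch, the four counts can be tallied directly from paired lists of actual and predicted labels. This assumes labels are encoded as 1 (positive) and 0 (negative); the helper name count_outcomes and the toy labels are hypothetical.

```python
def count_outcomes(y_true, y_pred, positive=1):
    """Tally TP, FP, TN, FN for a binary problem (hypothetical helper)."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return tp, fp, tn, fn


# Toy labels, purely illustrative
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]

tp, fp, tn, fn = count_outcomes(y_true, y_pred)
print(f"TP={tp} FP={fp} TN={tn} FN={fn}")  # TP=3 FP=1 TN=3 FN=1
```

Scikit-Learn's confusion_matrix lays out the same counts as [[TN, FP], [FN, TP]] for labels 0 and 1, so confusion_matrix(y_true, y_pred).ravel() unpacks in the order TN, FP, FN, TP.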

Accuracy: The Starting Point

Accuracy is the most straightforward metric: the proportion of all predictions that are correct.

Formula:

\[ \text{Accuracy} = \frac{TP + TN}{TP + FP + FN + TN} \]

Example:

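Consider a model evaluated on 10,255 instances, of which only 15 are actually positive. It produces 10 true positives, 9,990 true negatives, 250 false positives, and 5 false negatives:

\[ \text{Accuracy} = \frac{10 + 9990}{10 + 250 + 5 + 9990} = \frac{10000}{10255} \approx 97.5\% \]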

While an accuracy of 97.5% seems impressive, it’s essential to recognize its limitations, especially in cases of imbalanced datasets where one class significantly outnumbers the other.
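The sketch below reproduces the arithmetic for the running example (TP = 10, FP = 250, FN = 5, TN = 9,990) and compares it with a hypothetical baseline that predicts "negative" for every instance. The helper name accuracy is illustrative.

```python
def accuracy(tp, fp, tn, fn):
    """Share of all predictions that are correct."""
    return (tp + tn) / (tp + fp + tn + fn)


# Counts from the running example
model = accuracy(tp=10, fp=250, tn=9990, fn=5)

# Hypothetical baseline: label every instance negative.
# All 15 actual positives become false negatives; all 10,240 negatives are true negatives.
baseline = accuracy(tp=0, fp=0, tn=10240, fn=15)

print(f"Model accuracy:    {model:.2%}")     # 97.51%
print(f"Baseline accuracy: {baseline:.2%}")  # 99.85%
```

The baseline never finds a single positive instance, yet it scores higher than the model. That is exactly how accuracy misleads on imbalanced data.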

Precision: Measuring Exactness

Precision assesses how many of the positively predicted instances are actually correct. It answers the question: When the model predicts a positive class, how often is it correct?

Formula:

\[ \text{Precision} = \frac{TP}{TP + FP} \]

Example:

Using the same model:

\[ \text{Precision} = \frac{10}{10 + 250} = 3.8\% \]

A low precision indicates a high number of false positives, which can be problematic in applications where false alarms are costly.

Importance of Precision:

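Precision is crucial in scenarios where the cost of false positives is high, such as spam filtering, where a legitimate email sent to the spam folder may never be read, or medical tests whose positive result triggers costly or invasive follow-up procedures.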
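A minimal precision helper is sketched below. When the model makes no positive predictions, TP + FP is zero and the ratio is undefined; this sketch returns 0.0 in that case, which mirrors Scikit-Learn's default zero_division behavior for precision_score (the library also emits a warning). The function name is hypothetical.

```python
def precision(tp, fp):
    """Fraction of positive predictions that are correct."""
    predicted_positive = tp + fp
    if predicted_positive == 0:
        return 0.0  # no positive predictions: precision is undefined, report 0
    return tp / predicted_positive


print(f"{precision(tp=10, fp=250):.1%}")  # 3.8%
print(precision(tp=0, fp=0))              # 0.0
```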

Recall (Sensitivity): Measuring Completeness

Recall, also known as Sensitivity, measures the model’s ability to identify all relevant instances. It answers the question: Of all actual positive instances, how many did the model correctly identify?

Formula:

\[ \text{Recall} = \frac{TP}{TP + FN} \]

Example:

\[ \text{Recall} = \frac{10}{10 + 5} \approx 66.7\% \]

A higher recall indicates that the model is capturing a larger portion of the positive class, which is desirable in applications like disease screening.

Importance of Recall:

Recall is vital in situations where missing a positive instance has severe consequences, such as in disease detection or security threat identification.
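Recall on its own can be gamed. The sketch below computes recall for the running example and for a hypothetical model that labels every instance positive. That model catches all 15 positives, so its recall is perfect, but its precision collapses. The helper names are illustrative.

```python
def recall(tp, fn):
    """Fraction of actual positives the model found."""
    return tp / (tp + fn)


def precision(tp, fp):
    """Fraction of positive predictions that are correct."""
    return tp / (tp + fp)


# Running example
print(f"Model recall:           {recall(tp=10, fn=5):.1%}")         # 66.7%

# Hypothetical "always positive" model: all 10,255 instances are flagged.
print(f"All-positive recall:    {recall(tp=15, fn=0):.1%}")         # 100.0%
print(f"All-positive precision: {precision(tp=15, fp=10240):.2%}")  # 0.15%
```

This is why recall is usually reported alongside precision rather than alone.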

Specificity: The Underappreciated Metric

Specificity measures the proportion of actual negatives that are correctly identified. It answers the question: Of all actual negative instances, how many did the model correctly recognize?

Formula:

\[ \text{Specificity} = \frac{TN}{TN + FP} \]

Example:

\[ \text{Specificity} = \frac{9990}{9990 + 250} \approx 97.56\% \]

High specificity indicates that the model is effective at identifying negative instances, which is crucial in scenarios where false positives are particularly undesirable.

Importance of Specificity:

Specificity is essential in applications where accurately identifying the negative class is critical, such as fraud detection, where legitimate transactions should not be blocked, or medical screening, where healthy patients should be confirmed as disease-free.
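Scikit-Learn has no dedicated specificity function, but specificity is simply recall computed with the roles of the classes swapped: the negatives become the class of interest, TN plays the part of TP, and FP plays the part of FN. The sketch below checks that identity on the running example. The helper names are hypothetical.

```python
def recall(tp, fn):
    """Fraction of actual members of the class of interest that were found."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Fraction of actual negatives correctly identified."""
    return tn / (tn + fp)


spec = specificity(tn=9990, fp=250)

# Treat negatives as the class of interest: TN acts as TP, FP acts as FN.
negative_class_recall = recall(tp=9990, fn=250)

print(f"Specificity:           {spec:.2%}")                   # 97.56%
print(f"Negative-class recall: {negative_class_recall:.2%}")  # 97.56%
```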

F1 Score: Balancing Precision and Recall

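The F1 Score is the harmonic mean of precision and recall, providing a single metric that balances both concerns. Because the harmonic mean is dominated by the smaller of its two inputs, the F1 Score stays low unless both precision and recall are reasonably high, whereas an arithmetic mean would let a high value in one hide a poor value in the other.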

Formula:

\[ \text{F1 Score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \]

Example:

\[ \text{F1 Score} = 2 \times \frac{0.0385 \times 0.667}{0.0385 + 0.667} \approx 7.3\% \]

The F1 Score is particularly useful when you need a balance between precision and recall and when there is an uneven class distribution.

Importance of F1 Score:

The F1 Score suits situations where both false positives and false negatives matter. Note that it does not use true negatives at all, so it says nothing about how well the negative class is handled; pair it with specificity when that matters.
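To see why the harmonic mean matters, the sketch below compares it with the arithmetic mean for the running example. It also checks the algebraically equivalent count form, F1 = 2TP / (2TP + FP + FN). The helper name is illustrative.

```python
import math


def f1_from_counts(tp, fp, fn):
    """F1 as the harmonic mean of precision and recall."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


tp, fp, fn = 10, 250, 5
precision = tp / (tp + fp)
recall = tp / (tp + fn)

f1 = f1_from_counts(tp, fp, fn)
arithmetic_mean = (precision + recall) / 2

print(f"F1 (harmonic mean): {f1:.1%}")               # 7.3%
print(f"Arithmetic mean:    {arithmetic_mean:.1%}")  # 35.3%

# The same value follows directly from the raw counts
assert math.isclose(f1, 2 * tp / (2 * tp + fp + fn))
```

The arithmetic mean would credit the model with about 35% even though fewer than 4% of its positive predictions are correct; the harmonic mean is pulled toward the weaker value.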

Choosing the Right Metric for Your Model

Selecting the appropriate evaluation metric depends on the specific requirements and context of your project:

- Use Accuracy when the classes are balanced and all errors are equally costly.

- Use Precision when the cost of false positives is high.

- Use Recall when the cost of false negatives is high.

- Use Specificity when correctly identifying the negative class is crucial.

- Use F1 Score when you need a balance between precision and recall, especially in imbalanced datasets.

Understanding these metrics ensures that you choose the right one to align with your project’s goals and constraints.
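The choice of metric can change which model looks better. The sketch below scores the running example (model A) against a second model B on the same data. Model B's counts (TP = 6, FP = 20, FN = 9, TN = 10,220) are invented purely for illustration; it makes fewer positive predictions than A.

```python
def metrics(tp, fp, tn, fn):
    """Return the five metrics discussed above for one set of counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }


model_a = metrics(tp=10, fp=250, tn=9990, fn=5)  # running example
model_b = metrics(tp=6, fp=20, tn=10220, fn=9)   # hypothetical counts

for name in model_a:
    better = "A" if model_a[name] > model_b[name] else "B"
    print(f"{name:<12} A={model_a[name]:7.2%}  B={model_b[name]:7.2%}  -> {better}")
```

Only recall favors model A. If a missed positive is the costliest error, A may still be the better choice despite losing on every other metric.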

Leveraging Scikit-Learn for Metric Calculation

Calculating these metrics by hand is tedious and error-prone, especially on large datasets or multiclass problems. Fortunately, Python’s Scikit-Learn library provides ready-made functions for most of them.

Useful Scikit-Learn Functions:

- accuracy_score: Calculates accuracy.

- precision_score: Calculates precision.

- recall_score: Calculates recall.

- f1_score: Calculates the F1 Score.

- confusion_matrix: Generates the confusion matrix.

- classification_report: Produces a per-class report of precision, recall, F1 score, and support, along with overall accuracy.

Example:

```python
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    f1_score,
    precision_score,
    recall_score,
)

# Illustrative labels (1 = positive, 0 = negative); replace them with your own
# actual labels (y_true) and model predictions (y_pred).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]

conf_matrix = confusion_matrix(y_true, y_pred)
accuracy = accuracy_score(y_true, y_pred)
precision = precision_score(y_true, y_pred)  # positive class defaults to label 1
recall = recall_score(y_true, y_pred)
f1 = f1_score(y_true, y_pred)

print("Confusion Matrix:\n", conf_matrix)
print(f"Accuracy: {accuracy * 100:.2f}%")
print(f"Precision: {precision * 100:.2f}%")
print(f"Recall: {recall * 100:.2f}%")
print(f"F1 Score: {f1 * 100:.2f}%")
```

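Scikit-Learn has no dedicated specificity function. Because specificity is the recall of the negative class, you can read it from the negative-class row of classification_report, or compute it with recall_score by setting pos_label to the negative label.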
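A minimal sketch of these routes, assuming binary labels encoded as 0 (negative) and 1 (positive) and using small illustrative label lists. It also computes specificity from confusion_matrix, whose raveled output for labels 0 and 1 is ordered TN, FP, FN, TP.

```python
from sklearn.metrics import classification_report, confusion_matrix, recall_score

# Illustrative labels: 1 = positive, 0 = negative
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0, 0, 1]

# Route 1: specificity from the confusion matrix counts
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()
specificity_manual = tn / (tn + fp)

# Route 2: specificity as the recall of the negative class
specificity_sklearn = recall_score(y_true, y_pred, pos_label=0)

# Route 3: the same value is the recall in the "0" row of the report
report = classification_report(y_true, y_pred, output_dict=True)
specificity_report = report["0"]["recall"]

print(f"Specificity: {specificity_manual:.2%}")
```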

Conclusion

Evaluating a classification model’s performance requires a nuanced approach that goes beyond accuracy alone. By understanding and leveraging metrics like Precision, Recall, Specificity, and the F1 Score, you gain deeper insight into your model’s strengths and weaknesses, which helps you judge whether it will meet your project’s specific goals and requirements in real-world use.

Remember, the choice of metric should always be guided by the context of your application. Utilize tools like Scikit-Learn to streamline this process, allowing you to focus on refining your models for the best possible outcomes.
